Digital marketers work hard to get their websites ranked on the first pages of Google search. If a website isn’t showing up in the search results, though, something is being missed. Those efforts are in vain if the site’s pages are not indexed properly, and many different problems can lead to website indexation errors.

Yet statistics showing which website indexation issues are the most prevalent are hard to come by.

Indexing 101

In layperson’s terms, indexing means that Google needs to locate your pages and store them. Google can then examine the stored content to decide which queries each page should appear for. Let us identify the major website indexing issues.

Identify Indexing Issues

Many digital marketers ask the same question: why doesn’t my website show up on Google search? Our day-to-day work focuses on improving websites from a technical SEO audit point of view to increase their visibility on Google, which gives us access to hundreds of sites in Google Search Console.

We chose to use this data to identify the most common indexing problems that keep a website from showing in Google search:

1. Methodology For Google Indexing Page

We start by producing a sample of pages that combines data from two sources:

- We used the data readily available from our customers.

- We requested anonymized data from other SEO specialists by running Twitter polls and reaching out to certain SEOs personally.

Both proved to be fruitful sources of data.

2. Excluding Non-Indexable Pages

It is in your interest to keep some pages out of the index. These include obsolete URLs, non-relevant content, e-commerce filter parameters, and more. Webmasters can make sure that Google disregards them in a variety of ways, including robots.txt and noindex tags.

Taking such pages into account would hurt the quality of our findings, so we removed from the sample any page that met one of the following criteria:

- Blocked by robots.txt

- Marked as noindex

- Removed

- Returns an HTTP 404 status code
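The exclusion criteria above can be sketched as a simple filter. This is only an illustration, not the tooling used for the study: the `Page` record and its field names are hypothetical and stand in for whatever crawl or Search Console export you have.

```python
from dataclasses import dataclass


@dataclass
class Page:
    """Hypothetical record for one URL in the sample."""
    url: str
    status_code: int         # HTTP status returned for the URL
    blocked_by_robots: bool  # disallowed in robots.txt
    noindex: bool            # carries a noindex directive
    removed: bool            # page has been removed


def is_excluded(page: Page) -> bool:
    """True if the page meets any of the exclusion criteria."""
    return (
        page.blocked_by_robots
        or page.noindex
        or page.removed
        or page.status_code == 404
    )


def filter_sample(pages):
    """Keep only the pages that are meant to be indexable."""
    return [page for page in pages if not is_excluded(page)]
```

Every page that survives `filter_sample` is one that could reasonably be expected in the index, which is what makes its absence worth investigating.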

3. Excluding Non-Valuable Google Indexing Pages

To further increase the quality of the sample, we only evaluated pages that are included in sitemaps.

Of course, numerous websites have junk in their sitemaps, but we took care of that in the previous step. Understanding URL canonicalization will also help you tell indexable pages from non-indexable ones.

4. Categorizing Data For Google Website Indexing

We noticed that common indexing problems differ by website size. This is how we divided the data:

1. Small websites (up to 10k pages)

2. Average websites (from 10k to 100k pages)

3. Large websites (up to a million pages)

We had to find a way to normalize the data because of the differences in size among the websites in our sample. Otherwise, one huge website struggling with a particular issue could outweigh the problems that other, smaller websites have.

So we looked carefully at every website individually to sort out its indexing problems. We then assigned points to each indexing issue depending on the number of pages affected by that issue on a given website.
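The exact point scheme is not spelled out here, so the following is only one possible sketch of the idea. It assumes that an issue earns points equal to the share of a site's pages it affects, which keeps a single huge site from dominating the totals. The input layout (`total_pages`, `issues`) is hypothetical.

```python
def size_bucket(total_pages: int) -> str:
    """Assign a site to one of the size categories listed above."""
    if total_pages <= 10_000:
        return "small"
    if total_pages <= 100_000:
        return "average"
    return "large"


def score_issues(sites):
    """Sum per-site issue shares, grouped by size category.

    `sites` is a list of dicts such as
    {"total_pages": 1000, "issues": {"issue name": affected_pages}}.
    Points per issue = affected pages / total pages (an assumption).
    """
    scores = {}
    for site in sites:
        bucket = scores.setdefault(size_bucket(site["total_pages"]), {})
        for issue, affected in site["issues"].items():
            share = affected / site["total_pages"]
            bucket[issue] = bucket.get(issue, 0.0) + share
    return scores
```

With this scheme, an issue affecting 100 of a small site's 1,000 pages counts as much as one affecting 20,000 of a large site's 200,000 pages.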

Final Verdict On Google Page Indexing

Here are the top website indexation issues that we found:

1. Indexing Errors For Better Google Indexing

The first thing to verify is whether your page or website is already in the index. Search operators are the easiest way to check this. These are special keywords you can add to a Google search to get more targeted results. For example, use the “site:” operator to check whether a site is indexed:

site:domain.com

The same operator may also be used to verify a single page:

site:domain.com/page-name
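If you check many sites or pages, the “site:” queries can be built programmatically. The helper names below are made up for illustration; the snippet only builds the query string and the matching Google search URL, and it does not fetch or parse any results.

```python
from urllib.parse import urlencode


def site_query(domain: str, path: str = "") -> str:
    """Build a site: query for a whole domain or a single page."""
    return f"site:{domain}{path}"


def google_search_url(query: str) -> str:
    """Return a Google search URL with the query safely URL-encoded."""
    return "https://www.google.com/search?" + urlencode({"q": query})


# Whole site, then a single page (domain.com is a placeholder)
print(google_search_url(site_query("domain.com")))
print(google_search_url(site_query("domain.com", "/page-name")))
```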

If Google returns no results for such a query, it means that your page is not in the index.

Overall, indexing errors are faults that make it harder for a search engine to add your website or its content to its index. Remember that search engine spiders must crawl, index, and then rank your material before it can appear on search results pages. If these errors occur, people cannot find your content by entering the keywords you target, which hurts your ranking and reduces organic traffic.

Since most people strive to rank on Google, we will concentrate on the errors reported in Google Search Console. When these problems appear in Search Console, crawlers from other search engines are likely to run into the same issues.

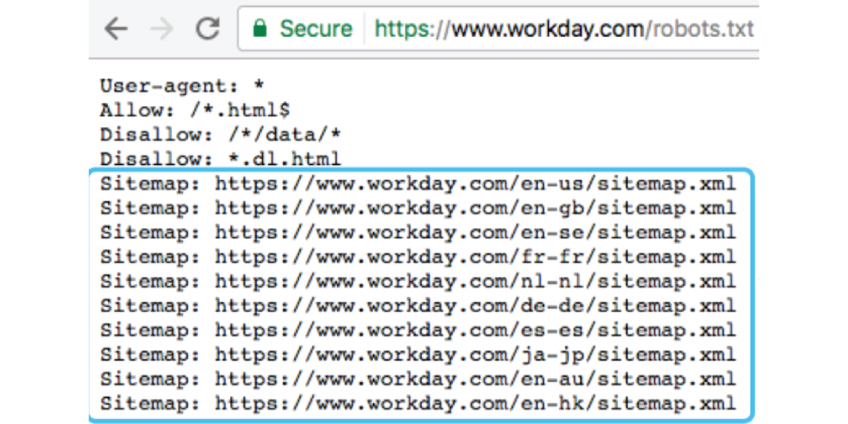

2. Misconfigured Robots.Txt File For Website Indexing

A robots.txt file instructs web spiders on what they may crawl. It is commonly used to keep crawlers away from pages such as your login page. A misconfigured robots.txt file may include a rule that blocks bots and spiders from crawling a page you want to appear in search.

Since your hosting provider may not be able to update this file, it is advisable to discuss the rule with your site developer. Besides the noindex tag, the robots.txt file is another place where a robots directive might block your page. You can quickly test this with Google’s robots.txt tester tool, or you can inspect the file directly by going to /robots.txt on your domain.
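A quick local check is also possible with Python's standard `urllib.robotparser` module. The sketch below parses robots.txt rules from a string and asks whether a given user agent may fetch a URL. The sample rules and URLs are made up, and the standard-library parser does simple prefix matching, so its verdict may differ from Google's own parser for rules that use wildcards.

```python
from urllib.robotparser import RobotFileParser


def is_crawl_allowed(robots_txt: str, url: str, user_agent: str = "Googlebot") -> bool:
    """Return True if the rules in robots_txt allow user_agent to fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt: the /blog/ rule is the kind of accidental block to look for
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /login
Disallow: /blog/
"""

for path in ("/login", "/blog/my-post", "/about"):
    allowed = is_crawl_allowed(SAMPLE_ROBOTS_TXT, "https://domain.com" + path)
    print(path, "allowed" if allowed else "blocked")
```

To check a live site instead, `RobotFileParser` also offers `set_url()` and `read()` to download the file before calling `can_fetch()`.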

3. Check Whether Your Page Is Findable For Proper Auditing

For a page to be indexed, a crawler must first discover it. There are a few ways in which crawlers can fail to discover your page (and which you can address to ensure that they find it).

If your page isn’t linked from anywhere, or if it’s linked only from an obscure part of the site, crawlers might not find the link and therefore might not find the page. Make sure your site has internal links pointing to the page if you want search engines to access and index it.

An XML sitemap is one of the best places to list the page. If the XML sitemap doesn’t include your page, Google may either regard it as not worthwhile or simply have a hard time discovering it. To avoid that, make sure your XML sitemap includes the pages that are crucial to have crawled and indexed.
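To confirm that your important pages are actually listed, you can parse the sitemap and compare it against the URLs you care about. The sketch below assumes a plain sitemap in the standard sitemaps.org format (not a sitemap index) that has already been loaded into a string; the sample URLs are placeholders.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard sitemaps.org sitemap files
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(sitemap_xml: str) -> set:
    """Return the set of URLs listed in <loc> elements of a sitemap."""
    root = ET.fromstring(sitemap_xml)
    return {
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    }


def missing_from_sitemap(sitemap_xml: str, important_urls) -> list:
    """List the important URLs that the sitemap does not include."""
    listed = sitemap_urls(sitemap_xml)
    return [url for url in important_urls if url not in listed]


SAMPLE_SITEMAP = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://domain.com/</loc></url>
  <url><loc>https://domain.com/pricing</loc></url>
</urlset>"""

print(missing_from_sitemap(
    SAMPLE_SITEMAP,
    ["https://domain.com/pricing", "https://domain.com/new-feature"],
))
```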

4. Check The Google Indexing Of Website

Sometimes your entire website fails to get indexed, which can happen if you don’t provide a sitemap. Having a sitemap is the first thing you should take care of to get your website indexed, since search engines follow a logical path when crawling your website. Not submitting one gives bots a difficult path to follow, and they might leave your website and content behind.

Happily, creating a sitemap is pretty straightforward, and several tools are available to help. If you use WordPress, like many people, there are plugins for that. If your website is suddenly de-indexed, a manual penalty may be the cause. Manual penalties are rather uncommon, but they do occur.

When this happens, all your pages are removed from the search results. To make matters worse, your brand name is affected too, so that you cannot find your site even when you search for it by name. There are various reasons why you might receive a manual penalty, including spammy backlinks, anchor text, and duplicate content.

One way to lift a manual penalty is to remove backlinks from dubious websites and rewrite your anchor text and content, if those are the basis for the penalty. Afterward, you have to contact the search engine in question to have your site re-indexed.

Indexing problems can be disastrous and cause enormous losses. It is therefore crucial for any website or business owner to understand what these problems are and how they can be identified and solved.

5. Duplicate Content Preventing Good Google Indexing

Heard about good content marketing? Content is still king and plays a vital role in Google indexing. Google aims to display the most relevant material for a given query. Consequently, it devalues content that is too similar to what is already in the index. Some ways duplicate content gets generated include:

- Parameters: if you use URL parameters, numerous versions of the same page may be available under different URLs

- Facets/Tag Pages: if you have faceted navigation or classification pages such as blog tag pages, the content is fairly similar, if not identical, across many tag or category pages

- Localization: city-specific pages may have the same content, and country/language combinations may require additional differentiation (or, for international variants, the use of hreflang annotations)

- External duplication: if the content is syndicated from another site, scraped, or otherwise published elsewhere, it is probably a duplicate (and doing too much of this can send a low-quality content signal)
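The parameter case in particular is easy to spot in a list of crawled URLs. The sketch below groups URLs that become identical once parameters that do not change the content are dropped. Which parameters are safe to ignore depends entirely on the site, so `IGNORED_PARAMS` is a hypothetical example, not a recommended list.

```python
from collections import defaultdict
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

# Hypothetical parameters assumed not to change page content
IGNORED_PARAMS = {"utm_source", "utm_medium", "utm_campaign", "sort", "sessionid"}


def normalize(url: str) -> str:
    """Drop ignored parameters, sort the rest, and lowercase the host."""
    parts = urlsplit(url)
    kept = sorted(
        (key, value)
        for key, value in parse_qsl(parts.query)
        if key not in IGNORED_PARAMS
    )
    return urlunsplit(
        (parts.scheme, parts.netloc.lower(), parts.path, urlencode(kept), "")
    )


def group_duplicates(urls):
    """Map each normalized URL to the variants that collapse onto it."""
    groups = defaultdict(list)
    for url in urls:
        groups[normalize(url)].append(url)
    return {key: variants for key, variants in groups.items() if len(variants) > 1}
```

Each group returned by `group_duplicates` is a set of URLs that likely serve the same content and are candidates for a shared canonical URL.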

You also need to confirm that the page does not have a rel-canonical tag that points to a different URL as the main version. This can be checked with the Google Search Console “Inspect URL” tool.
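The same check can be roughed out locally from a page's HTML. The sketch below uses Python's standard `html.parser` to find the first `rel="canonical"` link and compares it to the page's own URL. It assumes the canonical is given as an absolute URL in the HTML itself and ignores canonicals sent via HTTP headers; the class and function names are made up for illustration.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects the href of the first <link rel="canonical"> tag."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        if tag != "link" or self.canonical is not None:
            return
        attributes = dict(attrs)
        rel_values = (attributes.get("rel") or "").lower().split()
        if "canonical" in rel_values:
            self.canonical = attributes.get("href")


def canonical_points_elsewhere(html: str, page_url: str) -> bool:
    """True if the page declares a canonical URL other than itself."""
    finder = CanonicalFinder()
    finder.feed(html)
    if finder.canonical is None:
        return False
    # Ignore a trailing slash so /page and /page/ count as the same URL
    return finder.canonical.rstrip("/") != page_url.rstrip("/")
```

A `True` result means the page is asking search engines to index a different URL in its place, which would explain why it is missing from the index.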

6. Check Website’s Load Time For Google Website Indexing

A relatively slow website won’t normally keep your content from being indexed by Google (although it may hurt your ability to rank well). However, if your website takes considerably longer to load than industry norms, and especially if it takes extremely long, search engines may consider it a bad user experience and opt not to crawl the site. In that situation, they simply cannot access the content.

7. Check For Mobile Accessibility For Better Google Page Indexing

With mobile-first indexing as the prevailing (and eventually the sole) indexing strategy, crawlers can see your content only if it is available to them on the mobile version of your site. Make sure that the mobile version of your website provides all the material you wish to have indexed (and, of course, that the mobile version is fast and user-friendly).

8. Check For Google Penalty For Google Website Indexing

You may have been hit by a manual action if you have been toying with grey or black hat practices that go against Google’s guidelines. Manual actions are also known as “penalties” and occur when Google’s team manually penalizes a website for its conduct and techniques.

If you are affected by a manual action, you should get a notification in the Manual Actions report of Google Search Console. This notification typically includes information about the problem and advice on how to correct it. After all affected pages have been fixed, you will have to file a review request from inside the report.

9. ‘404 Not Found’ Error If Website Not Showing In Google Search

A 404 error is generated when no page can be found at a URL listed in your sitemap. To solve this problem, go back to the URL inspection and open the URL in a new tab to check whether the page is available. If it is, you can request indexing again.

If not, you must find out why and correct the problem, or redirect the URL to another page. However, if you don’t need the page indexed, it’s better to leave it alone, since Google and other search engines will de-index it before long.

Redirect errors occur if a URL is being redirected to a page that is not available. Fixing them is the same as repairing a not-found URL. After fixing the URL or redirecting it to an available page, you have to use “request indexing” and “validate fix” within your console to get the redirect indexed.
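The difference between a plain 404 and a redirect error can be made concrete with a small classifier. The sketch below works on already-collected crawl data rather than live requests: `responses` is a hypothetical mapping from URL to `(status_code, redirect_target)`, and the labels it returns are made up for illustration.

```python
REDIRECT_CODES = (301, 302, 307, 308)


def classify(url: str, responses: dict, max_hops: int = 5) -> str:
    """Follow redirects in `responses` and label the final outcome.

    Any URL missing from `responses` is treated as a 404.
    """
    seen = set()
    current = url
    for _ in range(max_hops + 1):
        if current in seen:
            return "redirect loop"
        seen.add(current)
        status, target = responses.get(current, (404, None))
        if status in REDIRECT_CODES and target:
            current = target  # follow the redirect one hop
            continue
        if status == 200:
            return "ok" if current == url else "redirects to available page"
        if status == 404:
            return "404 not found" if current == url else "redirect error"
        return f"other ({status})"
    return "too many redirects"
```

A URL labeled "redirect error" is one whose redirect chain ends at a missing page, which is the case described in the last paragraph above.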

10. Crawling Issues For Google Website Indexing

Crawling a website costs search engines considerable resources, and since they index billions of pages daily, you can start to see why they might skip indexing content when there is a crawling problem. Common problems include too many broken links, or resources such as JavaScript and CSS files that fail to load correctly. The problem might also be a slow connection between the search engine and your website.

Fix Google Indexing Issues

Google is now so omnipresent that most of the time we don’t even think of it as a private company. The Oxford English Dictionary and Merriam-Webster both added “Google” as a transitive verb in 2006. That is how prevalent it is. And this way of thinking is no surprise given its market share of almost 90 percent.

The difficulty is that we tend to believe, for some reason, that household names are more trustworthy, as if a company like Google were a public utility that aims merely to benefit its users rather than a market-leading private corporation that seeks to make a profit. There is nothing wrong with that in itself; it is what companies do.

They keep information to themselves or share it as it suits their goals. Because of Google’s near monopoly, however, they are also able to optimize for their own interests, not simply for accuracy or truth. Naturally, the search giant has no obligation to play nice.

As a private enterprise, Google doesn’t have to disclose how it updates its algorithms, whether they have a flaw, or what its actions and strategy are meant to achieve. Yet an entire industry is striving to figure out exactly these things.

We hope this article has given you a clearer picture of Google website indexation.