Large language models (LLMs) are gaining traction across enterprise organizations, with many building them into their AI applications. Foundation models are a strong starting point, but they take work to turn into a deployment-ready environment. NVIDIA NIM eases that process, allowing organizations to run AI models anywhere: in the data center, in the cloud, on workstations, and on PCs.

Created for enterprises, NIMs offer a full range of prebuilt, cloud-native microservices that integrate easily into existing infrastructure. These microservices are continuously maintained and updated, providing strong performance and access to the latest developments in AI inference technology.

What is NVIDIA NIM?

NVIDIA NIM (NVIDIA Inference Microservices) is a set of easy-to-use microservices for accelerating the deployment of foundation models on any cloud or data center infrastructure. It is designed to optimize AI infrastructure for efficiency and cost-effectiveness while reducing hardware and operational costs. NVIDIA NIM includes domain-specific NVIDIA CUDA libraries and specialized code tailored to domains such as language, speech, video processing, and generative biology and chemistry.

Features of NVIDIA NIM

Simple Deployment: NIM packages models as containers and optimizes them for NVIDIA hardware. This removes the need for manual configuration and helps ensure that resources are used efficiently.

Scalability: NIM can manage and scale deployments across NVIDIA platforms, including on-premises, cloud, and edge environments. This lets you adjust to changing workloads and data needs.

Monitoring and Management: NIM offers tools for monitoring model performance, resource utilization, and health. This allows users to identify and troubleshoot issues quickly and tune their deployments for efficiency.

Security: NIM provides security features to protect your models and data, including support for encryption, authentication, and authorization.

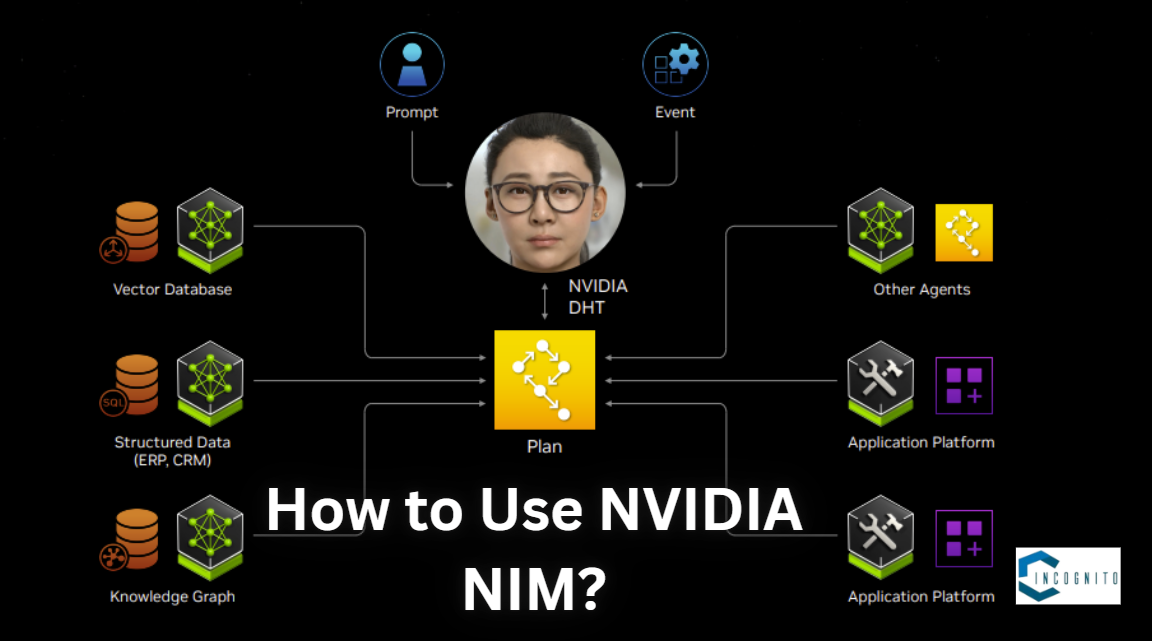

How to Use NVIDIA NIM?

To use NIM, developers can follow these steps:

Find AI Models in the NVIDIA API Catalog: Developers can choose from many AI models in the NVIDIA API catalog to build their own AI applications. They can start testing these models right in the catalog through a simple interface, or connect directly to the API for free.

Sign Up for the NVIDIA AI Enterprise Evaluation License: To deploy the microservice on their own infrastructure, developers need to sign up for the NVIDIA AI Enterprise 90-day evaluation license.

Download the Model: Developers can download the model they want to use from NVIDIA NGC. For instance, they can download a version of the Llama-2 7B model built for a single A100 GPU using this command: `ngc registry model download-version "ohlfw0olaadg/ea-participants/llama-2-7b:LLAMA-2-7B-4K-FP16-1-A100.24.01"`.

Extract the Downloaded Archive: Developers can unpack the downloaded archive into model storage using this command: `tar -xzf llama-2-7b_vLLAMA-2-7B-4K-FP16-1-A100.24.01/LLAMA-2-7B-4K-FP16-1-A100.24.01.tar.gz`.

Deploy the Microservice: With the NVIDIA AI Enterprise 90-day evaluation license in place, developers can deploy the microservice on their own infrastructure.
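The download and extract steps above can be combined into a small shell script. The following is only a sketch: the model version string and archive path are copied from the commands in this article, while the `run` and `fetch_model` helpers and the `DRY_RUN` switch are hypothetical names introduced here for illustration. It assumes the NGC CLI is already installed and configured.

```shell
#!/bin/sh
# Sketch: wraps the download and extract commands from the steps above.
set -eu

# Both values are copied from the commands shown in the article.
MODEL_VERSION="ohlfw0olaadg/ea-participants/llama-2-7b:LLAMA-2-7B-4K-FP16-1-A100.24.01"
ARCHIVE="llama-2-7b_vLLAMA-2-7B-4K-FP16-1-A100.24.01/LLAMA-2-7B-4K-FP16-1-A100.24.01.tar.gz"

# DRY_RUN is a hypothetical switch for this sketch: when set to 1,
# commands are printed instead of executed.
DRY_RUN="${DRY_RUN:-0}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Download the model version from NGC, then unpack the archive
# into the current directory.
fetch_model() {
    # Fail early with a clear message if the NGC CLI is missing.
    if [ "$DRY_RUN" != "1" ] && ! command -v ngc >/dev/null 2>&1; then
        echo "ngc CLI not found on PATH" >&2
        return 1
    fi
    run ngc registry model download-version "$MODEL_VERSION"
    run tar -xzf "$ARCHIVE"
}
```

After sourcing the script, calling `fetch_model` runs both steps in order. Setting `DRY_RUN=1` first prints the two commands without executing them, which is a cheap way to check the paths before starting a large download.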

The Future of AI Inference: NVIDIA NIMs and Beyond

NVIDIA NIM represents a significant advance in AI inference. As demand for AI-powered applications grows across industries, deploying these applications efficiently becomes essential. Enterprises that want to harness AI can use NVIDIA NIM to integrate prebuilt, cloud-native microservices into their existing systems. This shortens their time to market and helps them stay ahead in innovation.

The future of AI inference extends beyond individual NVIDIA NIMs. As demand for advanced AI applications grows, connecting multiple NVIDIA NIMs will become crucial. This network of microservices will enable smarter applications that work together and adapt to different tasks, changing the way we use technology. To deploy NIM inference microservices on your own infrastructure, see the guide "A Simple Guide to Deploying Generative AI with NVIDIA NIM".

NVIDIA regularly releases new NIMs, giving organizations access to powerful AI models for their enterprise applications. Visit the API catalog for the latest NVIDIA NIMs for LLMs, vision, retrieval, 3D, and digital biology models.
